Today Panasonic Automotive Systems has announced an OpenChain ISO/IEC 5230 conformant program. As a leading Tier 1 automotive supplier, Panasonic Automotive Systems is at the forefront of both using and effectively managing open source technology.

“During the certification process, we worked to improve the reliability of our OSS usage and products by structuring OSS utilization processes and building a highly secure management system,” said Masashige Mizuyama, Executive Vice President and Chief Technology Officer at Panasonic Automotive Systems. “We have actively contributed to the industry by promoting the standardization and open-sourcing of VirtIO, an open-source virtualization technology. Taking this certification as an opportunity, we will continue to provide high-quality and highly reliable solutions leveraging OSS, and contribute to the expansion and sustainable growth of the open source ecosystem in the in-vehicle device industry.”

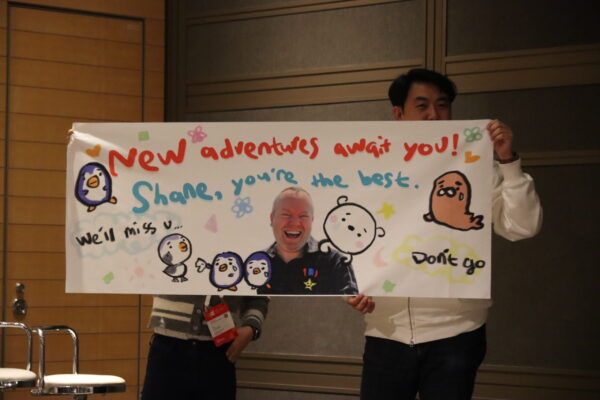

“We are delighted to welcome Panasonic Automotive Systems into our community of conformance,” says Shane Coughlan, OpenChain General Manager. “Adoption of OpenChain ISO/IEC 5230 has been exceptional across the automotive supply chain, and the influence and inspiration provided by Tier 1 adoption cannot be overstated. We look forward to working with the Panasonic Automotive Systems team in the months and years ahead.”

About Panasonic Automotive Systems Co., Ltd.:

Panasonic Automotive Systems Co., Ltd. (PAS) was launched on April 1, 2022 as an operating company responsible for the automotive systems business, in line with the start of the Panasonic Group’s operating company system. On December 2, 2024, the company moved to a management structure in which 80% of its shares are held by funds managed by an affiliate of Apollo Global Management, Inc. and 20% by Panasonic Holdings Corporation.

Headquartered in Japan, PAS is a global company with subsidiaries in eight other countries and, as a Tier 1 company, it provides advanced proprietary technologies such as infotainment systems to automakers in Japan and overseas, helping to create comfortable, safe, and secure automobiles. PAS is committed to meeting the expectations of its customers around the world with technologies that stand by people in pursuit of its corporate vision of becoming the “Joy in Motion” design company. To learn more about our company, please visit https://automotive.panasonic.com/en

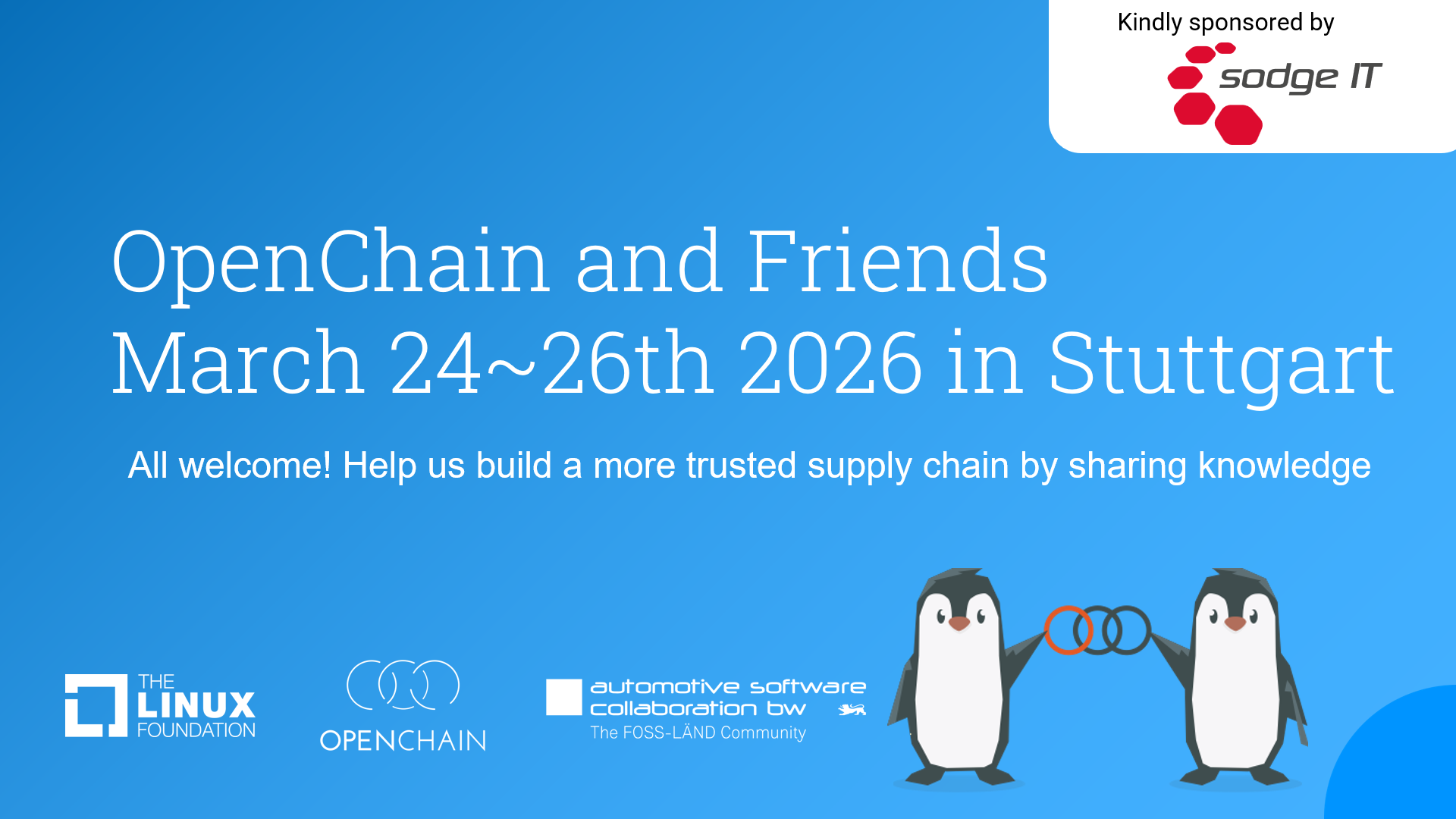

About the OpenChain Project:

The OpenChain Project has an extensive global community of over 1,000 companies collaborating to make the supply chain quicker, more effective and more efficient. It maintains OpenChain ISO/IEC 5230, the international standard for open source license compliance programs, and OpenChain ISO/IEC 18974, the industry standard for open source security assurance programs.

About The Linux Foundation:

The Linux Foundation is the world’s leading home for collaboration on open source software, hardware, standards, and data. Linux Foundation projects are critical to the world’s infrastructure, including Linux, Kubernetes, Node.js, ONAP, PyTorch, RISC-V, SPDX, OpenChain, and more. The Linux Foundation focuses on leveraging best practices and addressing the needs of contributors, users, and solution providers to create sustainable models for open collaboration. For more information, please visit us at linuxfoundation.org.

Check Out The Publicly Announced Community of Conformance:

https://openchainproject.org/community-of-conformance